TensorFlow version (2017-06-24): 1.2.0
Python version: 3.5.3

- Contents:
  - TensorBoard visualization
  - tfdbg debugging
  - Commonly used high-level functions

I. TensorBoard Visualization

1. Visualizing the computation graph

- Full code: click to view

- Dataset: MNIST handwritten digits
- Load the data


(1) Fully connected network

- Hyperparameters


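Define a function that adds one layer

- `num_layer` specifies which layer this is, and `activation` specifies the activation function; if no activation is given, that step is skipped and the layer stays linear.

```python
import tensorflow as tf

'''Add one fully connected layer'''
def add_fully_layer(inputs, input_size, output_size, num_layer, activation=None):
    # str() lets num_layer be passed as either 1 or '1'
    with tf.name_scope('layer_' + str(num_layer)):
        with tf.name_scope('Weights'):
            W = tf.Variable(initial_value=tf.random_normal(shape=[input_size, output_size]), name='W')
        with tf.name_scope('biases'):
            b = tf.Variable(initial_value=tf.zeros(shape=[1, output_size]) + 0.1, name='b')
        with tf.name_scope('Wx_plus_b'):
            Wx_plus_b = tf.matmul(inputs, W) + b
        if activation is not None:
            outputs = activation(Wx_plus_b)
        else:
            outputs = Wx_plus_b  # no activation: linear output
        return outputs
```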
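To see what the ops inside a `layer_x` box compute, here is a minimal pure-Python sketch of the same `Wx_plus_b` step without TensorFlow. The helper names `dense_forward` and `relu` and the sample numbers are illustrative assumptions. `inputs` has shape [batch, input_size], `W` has shape [input_size, output_size], and the [1, output_size] bias row is broadcast to every example in the batch.

```python
from typing import Callable, List, Optional

Matrix = List[List[float]]


def dense_forward(inputs: Matrix, W: Matrix, b: List[float],
                  activation: Optional[Callable[[float], float]] = None) -> Matrix:
    """Compute activation(inputs @ W + b) for a batch of row vectors."""
    input_size, output_size = len(W), len(W[0])
    outputs = []
    for row in inputs:
        assert len(row) == input_size, "inputs must have shape [batch, input_size]"
        # Wx_plus_b: one dot product per output unit, plus the bias broadcast from [1, output_size]
        z = [sum(row[k] * W[k][j] for k in range(input_size)) + b[j]
             for j in range(output_size)]
        # As in add_fully_layer, no activation means the layer stays linear
        outputs.append([activation(v) for v in z] if activation is not None else z)
    return outputs


def relu(v: float) -> float:
    return max(0.0, v)


x = [[1.0, 2.0], [0.5, -1.0]]            # batch of 2, input_size = 2
W = [[1.0, -1.0, 0.0], [0.5, 0.5, 1.0]]  # [input_size, output_size] = [2, 3]
b = [0.1, 0.1, 0.1]                      # 0.1 bias, as in the post
print(dense_forward(x, W, b))
print(dense_forward(x, W, b, relu))
```

Passing `tf.nn.relu` as `activation` in the TensorFlow version corresponds to passing `relu` here.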

Define the inputs, the graph structure, the loss, and the optimizer


- Define the `Session` and `tf.summary.FileWriter`


Finally, open a terminal in the parent directory of `logs`, run `tensorboard --logdir=logs/`, and open http://localhost:6006 in a browser to view the result.

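- Custom version: `cross_entropy = -tf.reduce_sum(y*tf.log(predictions), reduction_indices=[1])`
- Using TensorFlow's built-in version: `cross_entropy = tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=predictions)`
  - Note that the custom version expects `predictions` to already be softmax probabilities, whereas `softmax_cross_entropy_with_logits` applies softmax internally and expects the unnormalized logits. Passing it softmax outputs applies softmax twice.
- As you can see, the names defined with `tf.name_scope` become the boxes in the graph; click a box to see what it contains.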
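The two cross-entropy formulations can be compared numerically in pure Python. This is a sketch under assumptions: the helper names and the example numbers are illustrative, and `cross_entropy_with_logits` only mirrors the math of TensorFlow's fused op (softmax plus log-sum-exp), not its implementation. It shows that both agree when each gets the input it expects, and that the custom `-sum(y*log(p))` form breaks once a probability underflows to 0 (in TensorFlow, `tf.log(0)` yields `-inf`, which then shows up as inf/NaN in the loss).

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtracting the max avoids overflow in exp
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def custom_cross_entropy(y: List[float], predictions: List[float]) -> float:
    """-sum(y * log(p)); `predictions` must already be probabilities."""
    return -sum(t * math.log(p) for t, p in zip(y, predictions))


def cross_entropy_with_logits(y: List[float], logits: List[float]) -> float:
    """Softmax and cross-entropy fused via log-sum-exp; `logits` are raw scores."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    # log(softmax(z)_k) = z_k - logsumexp(z)
    return -sum(t * (z - log_sum_exp) for t, z in zip(y, logits))


y = [0.0, 1.0, 0.0]  # one-hot label
logits = [2.0, 1.0, 0.1]
print(custom_cross_entropy(y, softmax(logits)))       # matches the next line up to rounding
print(cross_entropy_with_logits(y, logits))
print(cross_entropy_with_logits(y, softmax(logits)))  # wrong: softmax applied twice
```

With large logits such as `[1000.0, 0.0, 0.0]`, the fused version stays finite while `custom_cross_entropy(y, softmax(...))` hits `log(0)` and raises a `ValueError` in Python.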

(2) CNN (convolutional neural network)

Add one convolutional layer plus a pooling layer

- Pooling defaults to max pooling with size 2.

```python
import tensorflow as tf

'''CNN: add one convolutional layer followed by pooling (max pooling, size=2)'''
def add_conv_layer(inputs, filter_size, input_channels, output_channels, num_layer, activation=tf.nn.relu):
    with tf.name_scope('conv_layer_' + str(num_layer)):
        with tf.name_scope('Weights'):
            Weights = tf.Variable(tf.truncated_normal(stddev=0.1, shape=[filter_size, filter_size, input_channels, output_channels]), name='W')
        with tf.name_scope('biases'):
            b = tf.Variable(tf.constant(0.1, shape=[output_channels]), name='b')
        with tf.name_scope('conv2d'):
            # stride 1 with 'SAME' padding keeps the height and width unchanged
            conv2d_plus_b = tf.nn.conv2d(inputs, Weights, strides=[1, 1, 1, 1], padding='SAME', name='conv') + b
            activation_conv_outputs = activation(conv2d_plus_b)
        with tf.name_scope('max_pool'):
            # 2x2 window with stride 2 halves the height and width
            max_pool_outputs = tf.nn.max_pool(activation_conv_outputs, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
        return max_pool_outputs
```
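With `padding='SAME'`, TensorFlow computes each output spatial size as ceil(input_size / stride), independent of the kernel size. So in `add_conv_layer` the stride-1 convolution keeps the size and the stride-2 max pool halves it (rounding up). The sketch below traces one MNIST image through two such layers; the two-layer stack and the channel counts 32 and 64 are hypothetical, chosen only for illustration.

```python
import math
from typing import Tuple


def same_out(size: int, stride: int) -> int:
    """Output length along one axis when padding='SAME'."""
    return math.ceil(size / stride)


def conv_pool_shape(shape: Tuple[int, int, int], output_channels: int) -> Tuple[int, int, int]:
    """(height, width, channels) after one add_conv_layer call: conv stride 1, then 2x2 max pool stride 2."""
    h, w, _ = shape
    h, w = same_out(h, 1), same_out(w, 1)  # tf.nn.conv2d, strides=[1, 1, 1, 1]
    h, w = same_out(h, 2), same_out(w, 2)  # tf.nn.max_pool, strides=[1, 2, 2, 1]
    return (h, w, output_channels)


shape = (28, 28, 1)  # one MNIST image
trace = [shape]
for channels in (32, 64):  # hypothetical channel counts for two stacked layers
    shape = conv_pool_shape(shape, channels)
    trace.append(shape)
print(trace)  # [(28, 28, 1), (14, 14, 32), (7, 7, 64)]
```

Odd sizes round up, for example `same_out(7, 2)` is 4, so a third layer would produce 4x4 feature maps.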

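Flatten the convolutional layer

- Returns the flattened layer and the number of features (the fully connected layer needs the latter).

```python
import tensorflow as tf

'''Flatten the conv layer'''
def flatten_layer(layer):
    '''@param layer: the conv layer'''
    layer_shape = layer.get_shape()  # layer_shape == [num_images, img_height, img_width, num_channels]
    num_features = layer_shape[1:4].num_elements()  # [1:4] is the last 3 dims; their product is the flattened length
    layer_flat = tf.reshape(layer, [-1, num_features])  # flatten; -1 lets TensorFlow infer the batch size
    return layer_flat, num_features
```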
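The flattening arithmetic can be checked by hand. Skipping dimension 0 is what makes `flatten_layer` work with an unknown batch size, since that entry is `None` in the static shape. This pure-Python sketch assumes a hypothetical conv output of shape [None, 7, 7, 64]; `num_elements` and `flatten_image` are illustrative helpers, and the row-major element order matches what `tf.reshape` uses.

```python
from functools import reduce
from operator import mul
from typing import List, Sequence


def num_elements(dims: Sequence[int]) -> int:
    """Product of the dimensions, like TensorShape.num_elements() for a fully known shape."""
    return reduce(mul, dims, 1)


def flatten_image(img: List[List[List[float]]]) -> List[float]:
    """Flatten one [height][width][channels] image in row-major order, the order tf.reshape uses."""
    return [v for row in img for pixel in row for v in pixel]


layer_shape = [None, 7, 7, 64]  # hypothetical conv output; batch size unknown (None)
num_features = num_elements(layer_shape[1:4])
print(num_features)  # 3136

tiny = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]  # height 2, width 2, channels 2
print(flatten_image(tiny))  # [1, 2, 3, 4, 5, 6, 7, 8]
```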

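Define the inputs

- `x` has to be reshaped into image-matrix form.

```python
import tensorflow as tf

# img_flatten_size, img_size, n_channels and num_classes come from the hyperparameters
'''placeholders'''
with tf.name_scope('inputs'):
    x = tf.placeholder(tf.float32, shape=[None, img_flatten_size], name='x')
    y = tf.placeholder(tf.float32, shape=[None, num_classes], name='y')
    # [batch, 784] -> [batch, img_size, img_size, n_channels]; -1 infers the batch size
    x_image = tf.reshape(x, shape=[-1, img_size, img_size, n_channels], name='x_images')
```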
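The `-1` in the reshape target is what lets the placeholder keep a `None` batch dimension: `tf.reshape` infers that single entry from the total number of elements. Here is a pure-Python sketch of that inference rule; `infer_shape` is a hypothetical helper, and the batch size of 50 is an arbitrary example value.

```python
from typing import List


def infer_shape(total: int, shape: List[int]) -> List[int]:
    """Resolve at most one -1 in a reshape target, as tf.reshape does."""
    if shape.count(-1) > 1:
        raise ValueError("at most one -1 is allowed")
    known = 1
    for d in shape:
        if d != -1:
            known *= d
    if -1 not in shape:
        if known != total:
            raise ValueError("cannot reshape %d elements into %s" % (total, shape))
        return list(shape)
    if total % known != 0:
        raise ValueError("cannot reshape %d elements into %s" % (total, shape))
    return [total // known if d == -1 else d for d in shape]


img_size, n_channels = 28, 1
img_flatten_size = img_size * img_size * n_channels  # 784 for MNIST
batch = 50  # arbitrary; any batch size works, which is why the placeholder uses None
print(infer_shape(batch * img_flatten_size, [-1, img_size, img_size, n_channels]))  # [50, 28, 28, 1]
```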

Define the graph structure


- Results: the overall CNN structure
- The internal structure of the first convolution and pooling layer

(3) RNN_LSTM (recurrent neural network)

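Declare the placeholders

- Each row of the image is treated as the input at one time step, so it takes n_steps=28 steps to traverse an image. The input `x` therefore has shape (batch_size, n_steps, n_inputs), where n_inputs is the number of pixels in one row.

```python
import tensorflow as tf

'''placeholders'''
with tf.name_scope('inputs'):
    # RNN: one image row per time step
    x = tf.placeholder(tf.float32, shape=[batch_size, n_steps, n_inputs], name='x')
    y = tf.placeholder(tf.float32, shape=[batch_size, num_classes], name='y')
```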
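Because `x` is declared as [batch_size, n_steps, n_inputs] while MNIST batches arrive as flat rows of 784 pixels, each batch has to be reshaped before it is fed (with numpy, something like `batch_x.reshape((batch_size, n_steps, n_inputs))`). This pure-Python sketch shows the resulting layout; `to_sequences` and the dummy pixel values are illustrative assumptions.

```python
from typing import List


def to_sequences(batch: List[List[float]], n_steps: int, n_inputs: int) -> List[List[List[float]]]:
    """Turn flat images [batch, n_steps*n_inputs] into [batch, n_steps, n_inputs]: one image row per time step."""
    out = []
    for flat in batch:
        assert len(flat) == n_steps * n_inputs, "each image must have n_steps * n_inputs pixels"
        out.append([flat[t * n_inputs:(t + 1) * n_inputs] for t in range(n_steps)])
    return out


n_steps, n_inputs = 28, 28
batch_x = [[float(i) for i in range(784)] for _ in range(2)]  # two dummy "images"
seq = to_sequences(batch_x, n_steps, n_inputs)
print(len(seq), len(seq[0]), len(seq[0][0]))  # 2 28 28
print(seq[0][1][:3])  # time step 1 starts at pixel 28: [28.0, 29.0, 30.0]
```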

Add one cell layer

- We only need the output after all n_steps steps (classify once the whole image has been traversed), which is `final_state[1]`: the LSTM state has two parts, the c state and the h state, and the output is the h state.

```python
import tensorflow as tf

'''RNN: add one cell layer'''
def add_RNN_Cell(inputs):
    with tf.name_scope('RNN_LSTM_Cell'):
        with tf.name_scope('weights'):
            weights = tf.Variable(tf.random_normal(shape=[state_size, num_classes]), name='W')
        with tf.name_scope('biases'):
            biases = tf.Variable(tf.constant(0.1, shape=[num_classes,]), name='b')
        cell = tf.nn.rnn_cell.BasicLSTMCell(num_units=state_size)
        init_state = cell.zero_state(batch_size, dtype=tf.float32)
        # use the function argument rather than the global placeholder x
        rnn_outputs, final_state = tf.nn.dynamic_rnn(cell=cell, inputs=inputs, initial_state=init_state)
        # final_state is (c, h); final_state[1] is h, the output after the last step
        logits = tf.matmul(final_state[1], weights) + biases
        return logits
```
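To make the `final_state[1]` choice concrete, here is a minimal pure-Python LSTM. The class `TinyLSTM`, its random weights, and the toy sequence are assumptions for illustration, not TensorFlow's code. It uses the standard gate equations with +1.0 added to the forget gate (similar in spirit to `BasicLSTMCell`'s default `forget_bias=1.0`) and keeps the state as a `(c, h)` pair, in the same order as TensorFlow's `LSTMStateTuple`. Since the output at each step is `h`, the last output equals `final_state[1]`; in TensorFlow this corresponds to `rnn_outputs[:, -1, :]` when every sequence runs the full `n_steps`.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
State = Tuple[Vector, Vector]  # (c, h), same order as LSTMStateTuple


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def matvec(M: List[Vector], v: Vector) -> Vector:
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


class TinyLSTM:
    def __init__(self, n_inputs: int, state_size: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        n = n_inputs + state_size
        self.state_size = state_size
        # one weight matrix per gate: input (i), candidate (j), forget (f), output (o)
        self.W = {gate: [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(state_size)]
                  for gate in "ijfo"}

    def zero_state(self) -> State:
        return [0.0] * self.state_size, [0.0] * self.state_size

    def step(self, x: Vector, state: State) -> Tuple[Vector, State]:
        c, h = state
        xh = x + h  # concatenate the input and the previous h
        i = [sigmoid(v) for v in matvec(self.W["i"], xh)]
        j = [math.tanh(v) for v in matvec(self.W["j"], xh)]
        f = [sigmoid(v + 1.0) for v in matvec(self.W["f"], xh)]  # +1.0 acts as a forget bias
        o = [sigmoid(v) for v in matvec(self.W["o"], xh)]
        new_c = [fk * ck + ik * jk for fk, ck, ik, jk in zip(f, c, i, j)]
        new_h = [math.tanh(ck) * ok for ck, ok in zip(new_c, o)]
        return new_h, (new_c, new_h)  # the per-step output is h


def run_rnn(cell: TinyLSTM, inputs: List[Vector]) -> Tuple[List[Vector], State]:
    """Like dynamic_rnn for one sequence: all outputs plus the final (c, h)."""
    state = cell.zero_state()
    outputs = []
    for x in inputs:
        out, state = cell.step(x, state)
        outputs.append(out)
    return outputs, state


cell = TinyLSTM(n_inputs=3, state_size=4)
sequence = [[0.1, 0.2, 0.3], [0.0, -0.1, 0.5], [0.4, 0.4, -0.2]]  # n_steps = 3
rnn_outputs, final_state = run_rnn(cell, sequence)
print(final_state[1] == rnn_outputs[-1])  # True: final_state[1] is h, the last output
```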

Network output and loss


- Results
- Overall structure
- Internal structure of the RNN

2. Visualizing the training process

- Full code: click to view

(1) Weights, biases, and loss

The function that adds a fully connected layer now looks like this:

- Just add calls such as `tf.summary.histogram(name=layer_name+'/Weights', values=W)`.

```python
import numpy as np
import tensorflow as tf

'''Add one fully connected layer'''
def add_fully_layer(inputs, input_size, output_size, num_layer, activation=None):
    layer_name = 'layer_' + str(num_layer)
    with tf.name_scope(layer_name):
        with tf.name_scope('Weights'):
            low = -4*np.sqrt(6.0/(input_size + output_size))  # use 4 for sigmoid, 1 for tanh activation
            high = 4*np.sqrt(6.0/(input_size + output_size))
            # Xavier initialization
            ## sigmoid
            # W = tf.Variable(tf.random_uniform(shape=[input_size, output_size], minval=low, maxval=high, dtype=tf.float32), name='W')
            ## relu
            W = tf.Variable(initial_value=tf.random_uniform(shape=[input_size, output_size], minval=low, maxval=high, dtype=tf.float32)/2, name='W')
            tf.summary.histogram(name=layer_name+'/Weights', values=W)  # summary.histogram
        with tf.name_scope('biases'):
            b = tf.Variable(initial_value=tf.zeros(shape=[1, output_size]) + 0.1, name='b')
            tf.summary.histogram(name=layer_name+'/biases', values=b)  # summary.histogram
        with tf.name_scope('Wx_plus_b'):
            Wx_plus_b = tf.matmul(inputs, W) + b
        if activation is not None:
            outputs = activation(Wx_plus_b)
        else:
            outputs = Wx_plus_b
        tf.summary.histogram(name=layer_name+'/outputs', values=outputs)  # summary.histogram
        return outputs
```
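The Xavier (Glorot) range used above, and the kind of distribution a histogram summary then displays, can be reproduced in pure Python. This is a sketch under assumptions: the 784 to 10 layer size is hypothetical, `xavier_uniform_bounds` and `histogram` are illustrative helpers, and the bucketing only approximates what TensorBoard draws. Because the ReLU variant divides the sampled weights by 2, the initial histogram should cover only the middle half of the Xavier range.

```python
import math
import random
from typing import List, Tuple


def xavier_uniform_bounds(fan_in: int, fan_out: int, activation: str = "sigmoid") -> Tuple[float, float]:
    """Glorot/Xavier uniform range, scaled by 4 for sigmoid and 1 for tanh as in the post."""
    scale = {"sigmoid": 4.0, "tanh": 1.0}[activation]
    high = scale * math.sqrt(6.0 / (fan_in + fan_out))
    return -high, high


def histogram(values: List[float], n_buckets: int, low: float, high: float) -> List[int]:
    """Count values per equal-width bucket over [low, high]."""
    counts = [0] * n_buckets
    width = (high - low) / n_buckets
    for v in values:
        k = min(int((v - low) / width), n_buckets - 1)  # v == high goes into the last bucket
        counts[k] += 1
    return counts


rng = random.Random(42)
low, high = xavier_uniform_bounds(784, 10)  # hypothetical layer: 784 inputs -> 10 outputs
# The post's relu variant divides the sampled weights by 2
weights = [rng.uniform(low, high) / 2 for _ in range(784 * 10)]
print(round(high, 4))  # about 0.348
print(histogram(weights, 8, low, high))  # only the middle buckets are populated
```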

Loss

- Because the loss is a single number, use `scalar` (the weights and biases above are matrices and vectors, so they use `histogram`):

```python
tf.summary.scalar(name='loss_value', tensor=losses)
```

During training

- `merged = tf.summary.merge_all()` merges all the summaries.
- For the loss and the other summaries, run `merged` during training and keep adding the result as the step count grows: `merged_result = sess.run(merged, feed_dict=feed_dict_train)`, then `writer.add_summary(summary=merged_result, global_step=i)`.

```python
import tensorflow as tf

# data, x, y, batch_size, train_step and accuracy are defined earlier in the script
'''Training'''
def optimize(n_epochs):
    with tf.Session() as sess:
        merged = tf.summary.merge_all()
        writer = tf.summary.FileWriter('logs', sess.graph)  # write the computation graph to the log directory
        sess.run(tf.global_variables_initializer())
        for i in range(n_epochs):
            batch_x, batch_y = data.train.next_batch(batch_size)
            feed_dict_train = {x: batch_x, y: batch_y}
            sess.run(train_step, feed_dict=feed_dict_train)
            if i % 20 == 0:
                print("epoch:{0}, accuracy:{1}".format(i, sess.run(accuracy, feed_dict=feed_dict_train)))
                merged_result = sess.run(merged, feed_dict=feed_dict_train)  # evaluate all merged summaries
                writer.add_summary(summary=merged_result, global_step=i)  # add them to the writer
        writer.close()  # flush pending events to disk

optimize(1001)
```
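The logging schedule of `optimize` can be checked without TensorFlow. `range(1001)` runs steps 0 to 1000, so writing a summary every 20 steps yields 51 points, the last at step 1000; the `global_step` passed to `add_summary` is what TensorBoard uses as the x-axis. In this sketch, `FakeSummaryWriter` is a hypothetical stand-in for `tf.summary.FileWriter`, and the decaying "loss" is made up.

```python
class FakeSummaryWriter:
    """Hypothetical stand-in for tf.summary.FileWriter that only records (global_step, value)."""

    def __init__(self):
        self.events = []  # list of (global_step, value) tuples

    def add_summary(self, summary, global_step):
        self.events.append((global_step, summary))


def optimize(n_epochs, log_every=20):
    """Same loop shape as the post's optimize(); training is faked with a decaying number."""
    writer = FakeSummaryWriter()
    loss = 2.3  # made-up starting loss
    for i in range(n_epochs):
        loss *= 0.995  # stands in for sess.run(train_step, ...)
        if i % log_every == 0:
            # stands in for sess.run(merged, ...) followed by writer.add_summary(...)
            writer.add_summary(summary=loss, global_step=i)
    return writer


writer = optimize(1001)
steps = [step for step, _ in writer.events]
print(len(steps), steps[:3], steps[-1])  # 51 [0, 20, 40] 1000
```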

结果

loss

- 权重和偏置的数据分布

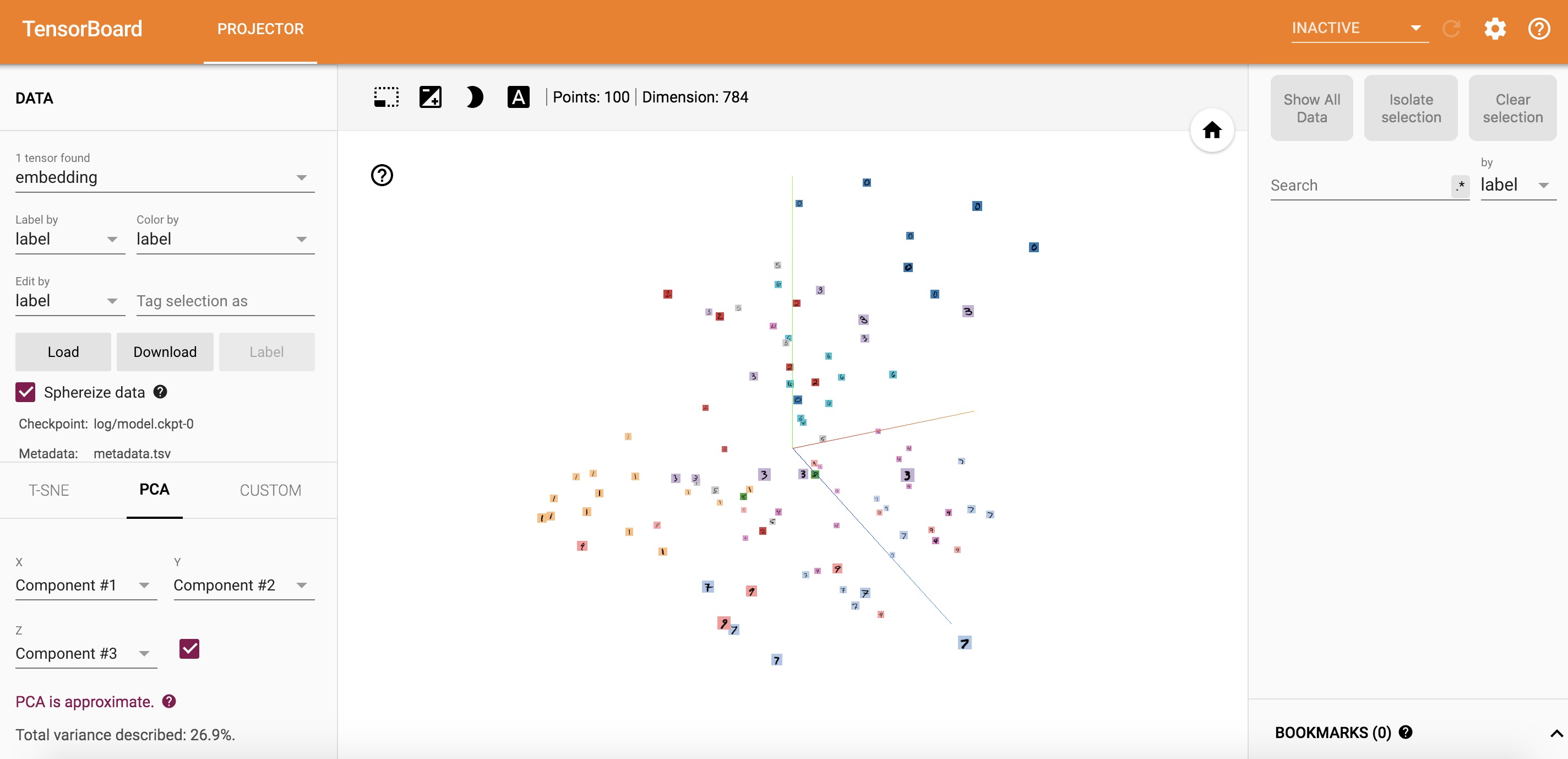

3. Visualizing embeddings

- Full code: click to view


- Results

II. Debugging TensorFlow with tfdbg

(1) Adding the code

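- Import the debugging package: `from tensorflow.python import debug as tf_debug`
- Wrap the Session and add a filter (you can also define your own filter):

```python
import tensorflow as tf
from tensorflow.python import debug as tf_debug

with tf.Session() as sess:
    # wrap the session so that each sess.run() call drops into the tfdbg command-line UI
    sess = tf_debug.LocalCLIDebugWrapperSession(sess)
    # register a filter that flags tensors containing inf or nan
    sess.add_tensor_filter(filter_name='inf or nan', tensor_filter=tf_debug.has_inf_or_nan)
```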
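A tensor filter is a callable taking `(datum, tensor)` and returning a bool, the same call shape as `tf_debug.has_inf_or_nan`. The pure-Python sketch below illustrates the logic using nested lists in place of arrays. `inf_or_nan_sketch` and `large_value_filter` are hypothetical names, and the real filter receives numpy arrays, so an actual custom filter would use something like `np.any(np.abs(tensor) > threshold)` instead of the loop. Registering one would then look like `sess.add_tensor_filter('large_value', large_value_filter)`.

```python
import math
from typing import Any, Iterator


def iter_values(tensor: Any) -> Iterator[Any]:
    """Yield the scalars of a (possibly nested) list; a stand-in for a numpy array."""
    if isinstance(tensor, (list, tuple)):
        for item in tensor:
            yield from iter_values(item)
    else:
        yield tensor


def inf_or_nan_sketch(datum: Any, tensor: Any) -> bool:
    """(datum, tensor) -> bool, the same call shape as tf_debug.has_inf_or_nan."""
    _ = datum  # a custom filter could also look at the tensor's metadata here
    if tensor is None:  # nothing to check
        return False
    return any(isinstance(v, float) and (math.isnan(v) or math.isinf(v))
               for v in iter_values(tensor))


def large_value_filter(datum: Any, tensor: Any, threshold: float = 1e3) -> bool:
    """Hypothetical custom filter: flag any tensor with |value| > threshold."""
    _ = datum
    if tensor is None:
        return False
    return any(abs(v) > threshold for v in iter_values(tensor))


print(inf_or_nan_sketch(None, [[1.0, float("nan")], [2.0, 3.0]]))  # True
print(inf_or_nan_sketch(None, [1.0, 2.0]))                          # False
print(large_value_filter(None, [[0.5], [5000.0]]))                  # True
```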

(2) Running

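- Run `python xxx.py --debug` on the command line to enter the debugger.
- In terminals that support mouse clicks, you can click directly to view a variable's information.
- `run` (or `r`) shows the names and other information of all the tensors.
- After the first `run` the variables have not been initialized yet; `pt tensor_name` prints a tensor's information.
- Running once more initializes the variables.
- Printed tensors can be sliced.
- More commands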
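In the snippet from (1), the session is wrapped unconditionally, so the `--debug` flag only changes behavior if the script itself reads it. One way to make it a real switch is sketched below using the standard `argparse` module. `parse_args` and `maybe_wrap` are hypothetical helpers; in the real script the `wrapper` argument would be `tf_debug.LocalCLIDebugWrapperSession`.

```python
import argparse
from typing import Any, Callable, List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    parser = argparse.ArgumentParser()
    parser.add_argument("--debug", action="store_true",
                        help="wrap the Session with tfdbg's LocalCLIDebugWrapperSession")
    return parser.parse_args(argv)


def maybe_wrap(sess: Any, debug: bool, wrapper: Callable[[Any], Any]) -> Any:
    """Return wrapper(sess) only when --debug was given, else the plain session."""
    return wrapper(sess) if debug else sess


# Intended use in the real script (requires TensorFlow, so not run here):
#   args = parse_args()
#   with tf.Session() as sess:
#       sess = maybe_wrap(sess, args.debug, tf_debug.LocalCLIDebugWrapperSession)
print(parse_args(["--debug"]).debug, parse_args([]).debug)  # True False
```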

- Post link: http://lawlite.me/2017/06/24/Tensorflow学习-工具相关/

- Copyright notice: unless otherwise stated, all articles on this blog are licensed under CC BY-NC-SA 3.0. Please credit the source when reposting!
